Technology Android

- Thread starter THEV1LL4N

One of my co-workers has an insane Doogee phone with like an 8,000 mAh battery or something nuts like that. It's pretty thick, but it literally goes 4 full days without needing a charge.

Doogee is a knock-off of Xiaomi at the moment though. A Chinese-Spanish knock-off of another Chinese company that doesn't sell their phones globally yet is a whole new level. I've heard they're not bad though for what they are.

I often wonder why there is so little variety in smartphone design. Is it because different phones don't sell, and everyone suddenly converged on liking the exact same things? I would like a change, and I'd take a slightly thicker phone if it meant a larger battery. All phones these days are glass sandwiches too, with some all-metal. It looks like they ditched plastic, despite it having its own advantages in many models. I would like to go back to the times when phones specialized, and you could have a bad-ass camera phone, a Walkman phone, a different form factor, etc. I'd be the first in line for a major new PureView-style Nokia Android phone, for instance, with a large camera sensor and a larger battery.

At least one of their phones is running Android 8.1. That's impressive. I'll have to look into the bands their phones support and see if they support Sprint's. I'm fine with my S7, but I'm seeing these phones on Amazon, sold by Doogee themselves, for ~$200. So long as the user experience isn't shitty, that's a bargain for a 9000 mAh battery with the latest OS.

https://www.tomshardware.com/news/intel-processors-lazyfp-speculative-execution,37302.html

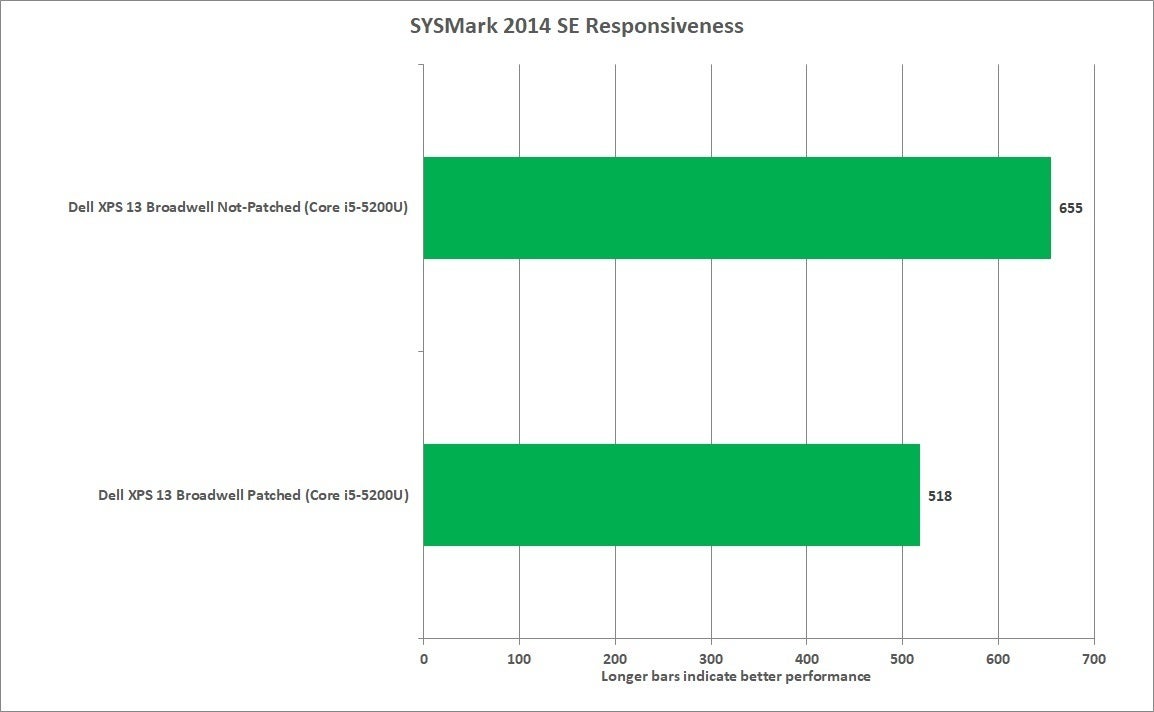

Only 2 of the 8 Spectre variants have gone public and been ghetto-patched so far, and each of those patches has slowed down Intel CPUs. At this point Skylake has been degraded to below unpatched Sandy Bridge performance per clock. Yet most patches haven't even been applied, and most bugs haven't been announced yet, because Intel pleaded for time to patch them before that happens.

I'm surprised it's only popped up now, considering those vulnerabilities have been there since 2011, and I wonder when Intel will finally release a more secure architecture that actually isn't vulnerable to these things, as Coffee Lake is still just Sandy Bridge with tweaks, and toothpaste instead of liquid metal under the lid.

Could this be the reason why all the computers at work seem to have slowed down considerably in the last few months?

Usually office computers slow down because IT techs who want to prove they're needed overcomplicate things and install too much bloatware. Sometimes it takes several minutes just to fully boot such systems, especially if they aren't running on SSDs. You'll even find computers running more than one antivirus program, which kills any life the computer once had in it. Now that Windows has a built-in antivirus, any other antivirus installed on top of it effectively makes that two.

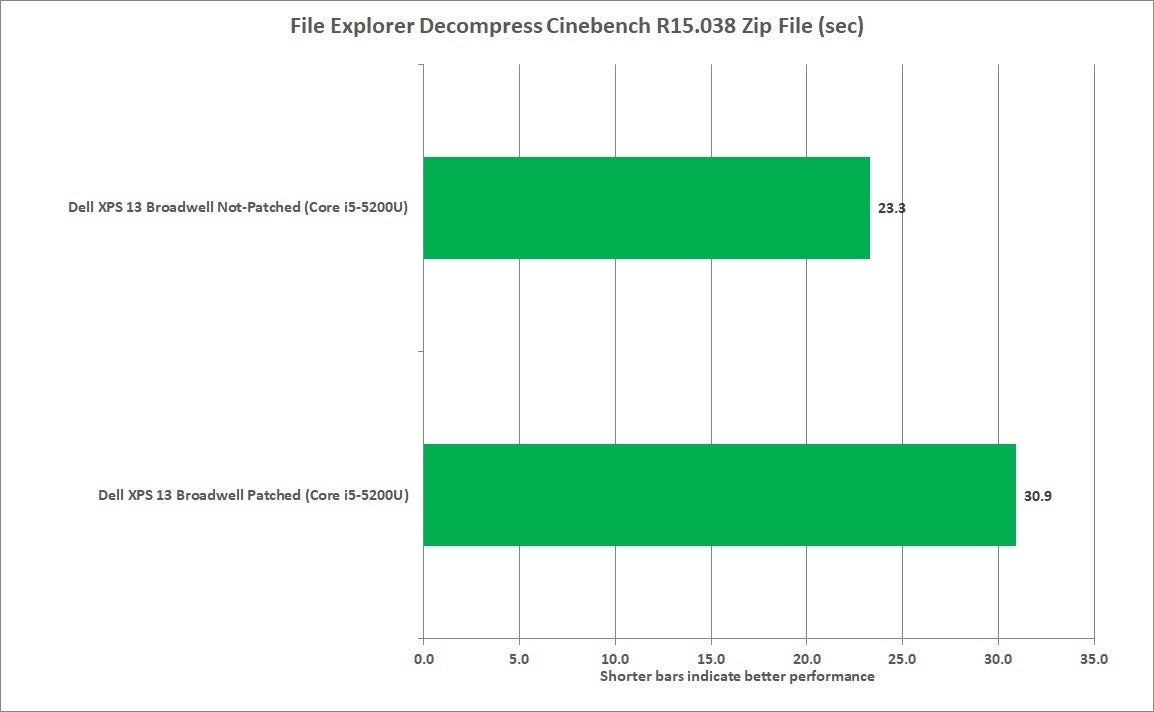

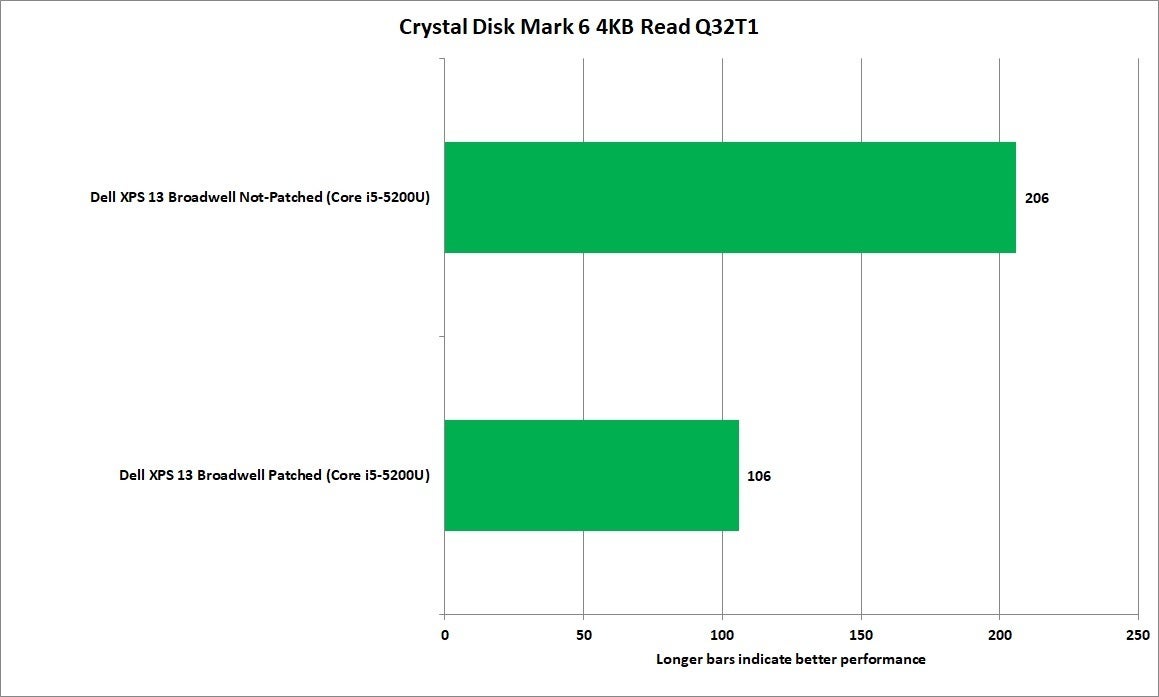

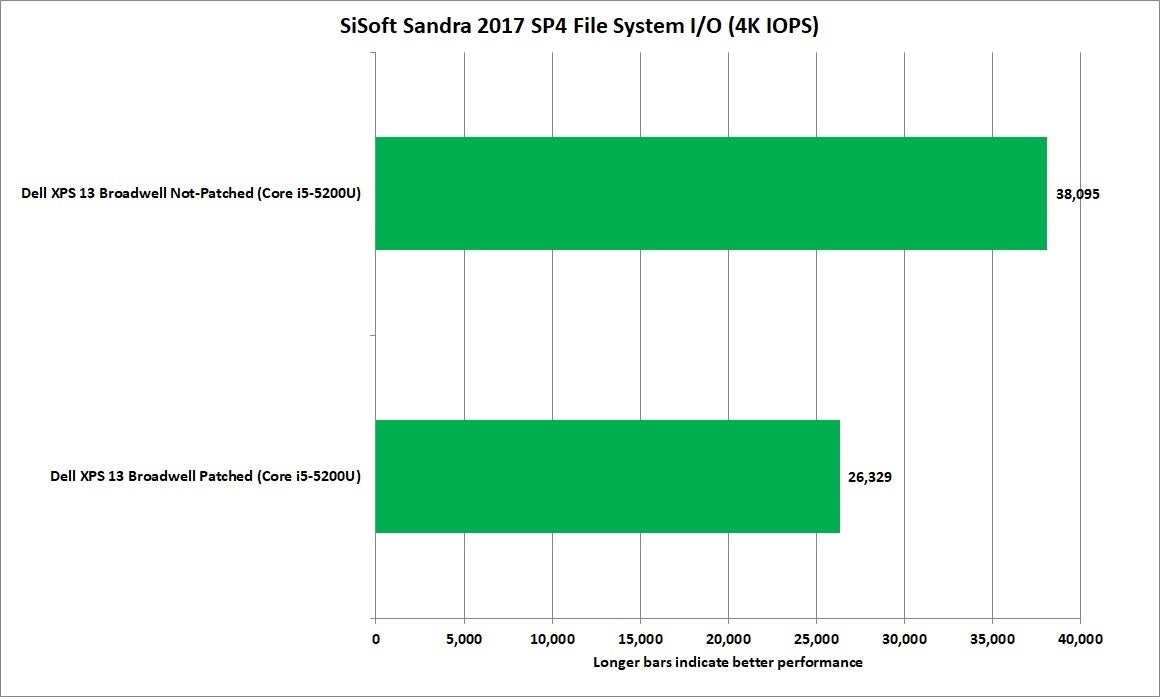

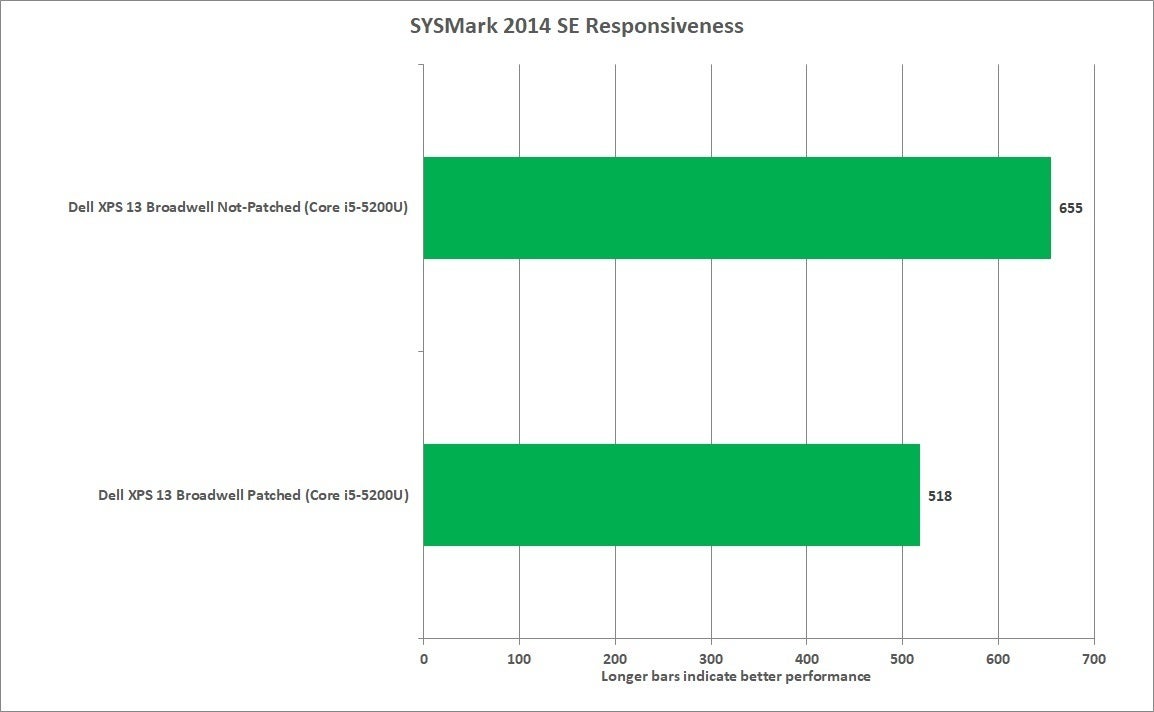

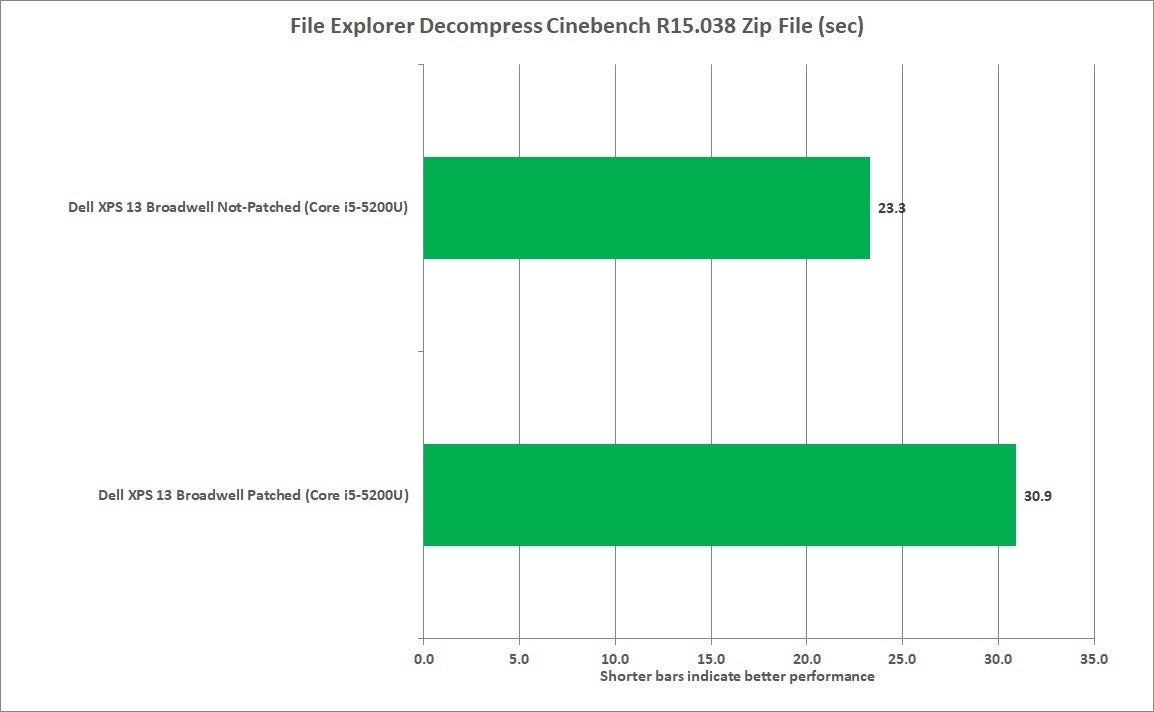

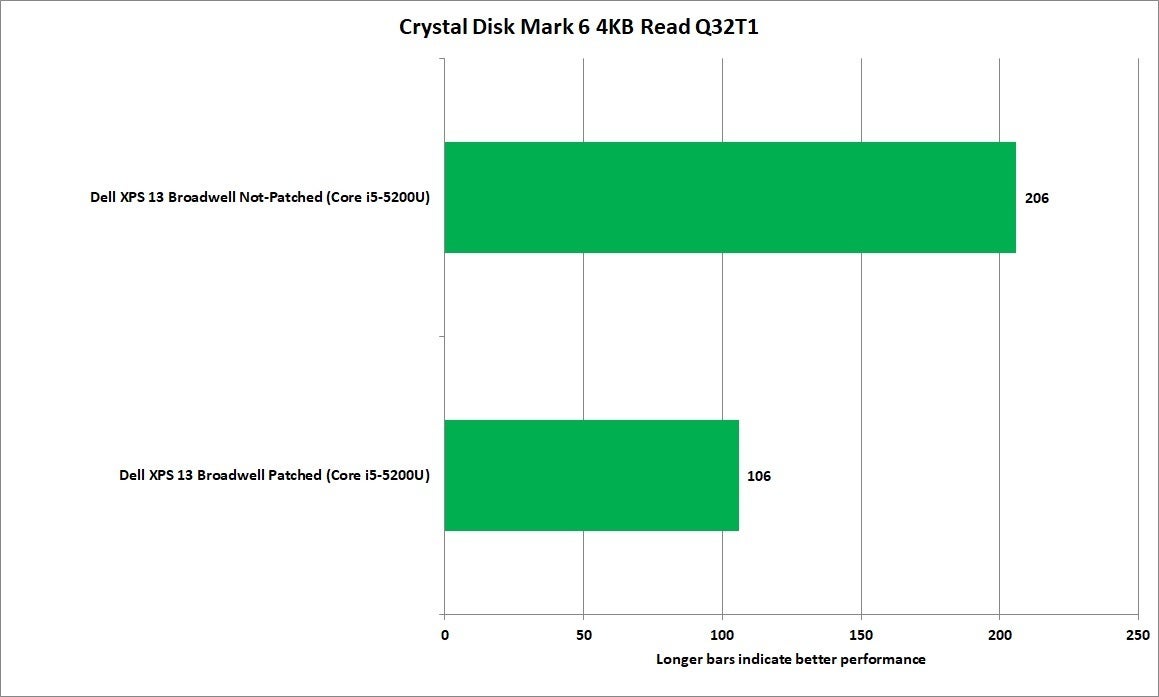

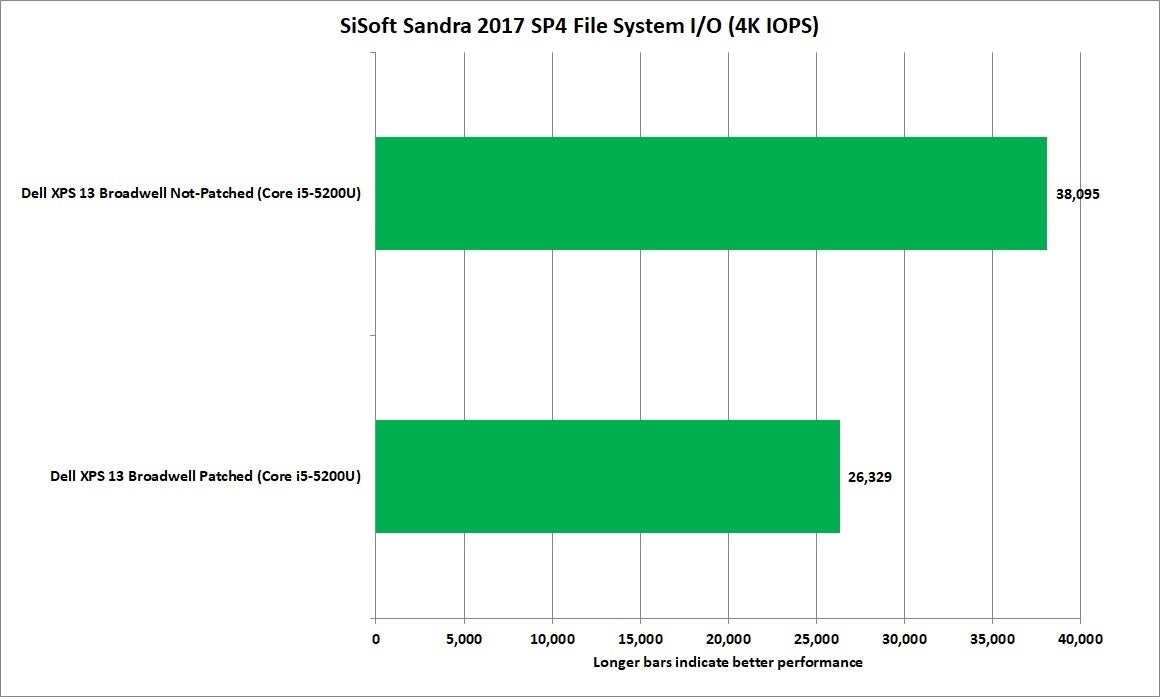

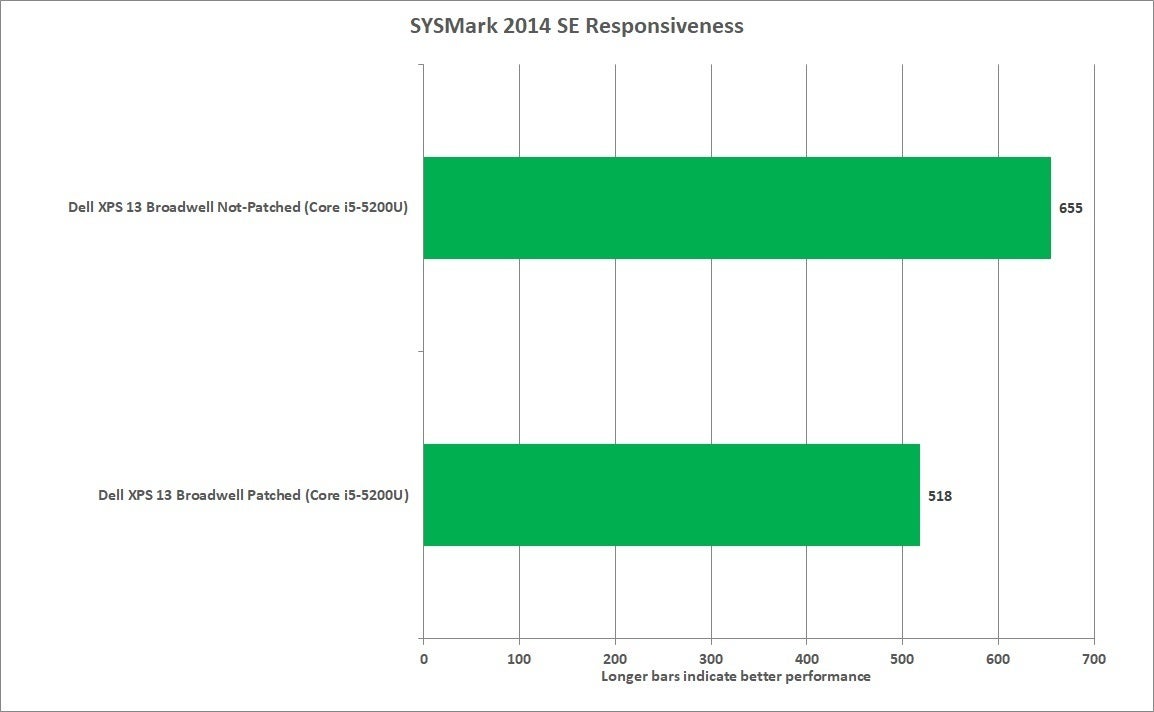

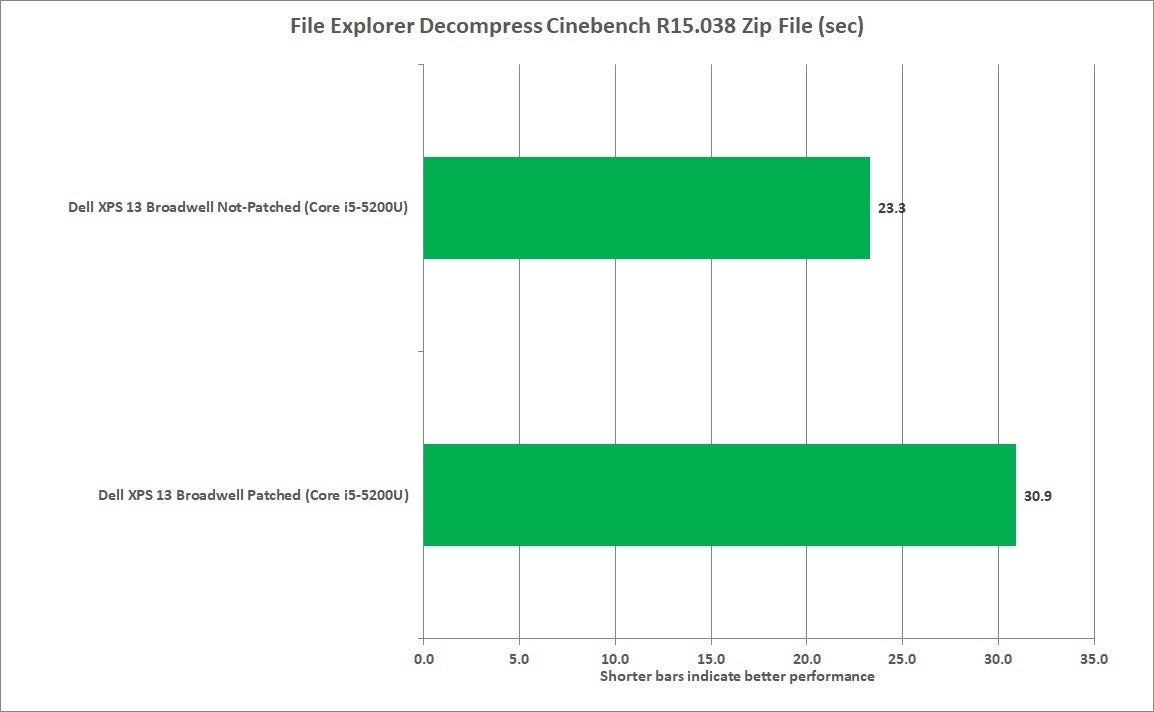

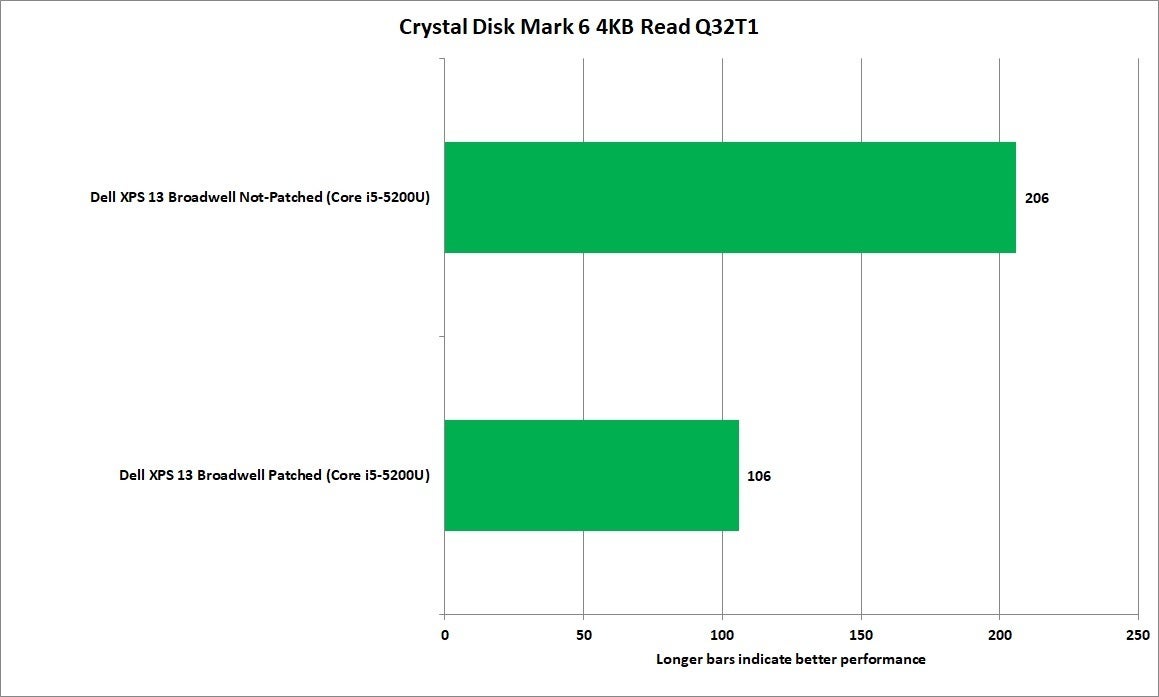

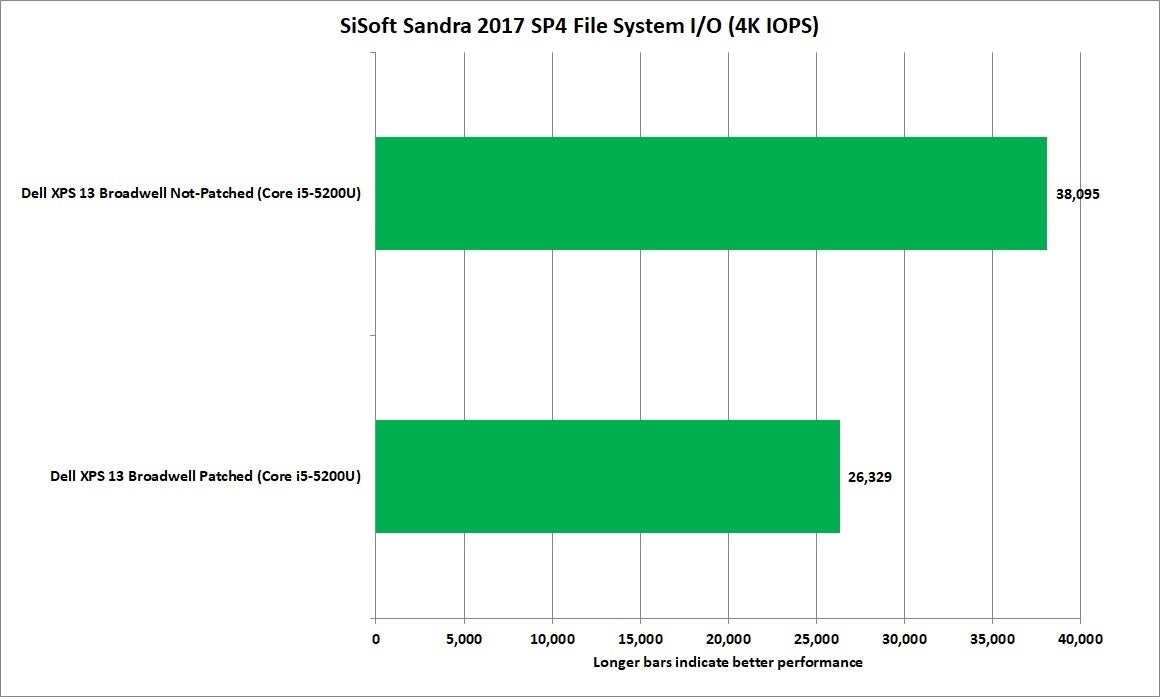

An additional performance hit comes from the fact that the Meltdown patches also slowed down disk access.
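One rough way to see this for yourself: much of the Meltdown patch cost lands on every switch from a program into the kernel, such as the system calls behind a disk read. The sketch below is an assumption-laden micro-benchmark, not a rigorous test. It times a pure user-space function against `os.stat(".")`, which makes a system call every time, so you can compare the per-call gap before and after patching. The helper names are made up for illustration, and Python's interpreter overhead plus OS caching make the numbers noisy.

```python
import os
import time


def ns_per_call(fn, iterations=100_000):
    """Average wall-clock cost of one fn() call, in nanoseconds."""
    start = time.perf_counter_ns()
    for _ in range(iterations):
        fn()
    return (time.perf_counter_ns() - start) / iterations


def user_space_work():
    # Hypothetical baseline: plain arithmetic that never enters the kernel.
    return sum((1, 2, 3))


def syscall_work():
    # Hypothetical probe: os.stat() asks the kernel for file metadata,
    # so every call crosses the user/kernel boundary.
    return os.stat(".")


if __name__ == "__main__":
    baseline = ns_per_call(user_space_work)
    probe = ns_per_call(syscall_work)
    print(f"user-space call: {baseline:8.1f} ns")
    print(f"syscall call:    {probe:8.1f} ns")
    print(f"difference:      {probe - baseline:8.1f} ns per call")
```

Only the difference between the two lines is interesting, and only when compared on the same machine across patch levels.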

I was wondering if I'd notice a difference if macOS was ever patched. It might have been, according to Apple, but I didn't notice anything slowing down on my system, whether in simple browsing or something more intense, like gaming.

Apple said Geekbench showed no difference after the patch, unless I'm not reading it correctly, which I'm probably not: https://support.apple.com/en-us/HT208394

Yeah, you wouldn't notice that in browsing or gaming. Neither of those leans heavily on the kind of work the patches slow down, and neither is usually limited by the CPU much. If you worked with lots of data or your processor had to crunch numbers, that's where it would simply be slower than it used to be. Even loading things, unzipping, and so on all take a bit longer now.

I wish someone did this with the current-gen MacBooks with Kaby Lake. Would the OS play a role, even though this is a patch for hardware? I don't think any PCs use exactly the same CPUs as Apple does, but even if they did, would macOS vs. Windows be a factor in performance?

Kaby Lake took a smaller hit than Broadwell, but the OS doesn't matter. Apple just ported the same fixes that were applied on Windows. The fixes are microcode supplied directly by Intel, which gets loaded onto the CPU and whose contents nobody else has access to (not Microsoft, not Apple). The OS is just the vehicle for delivering the patch to the CPU; the CPU's behavior gets altered, and that behavior doesn't depend on the OS.

They use the same CPUs. Intel doesn't make CPUs exclusively for Apple; Apple just buys what's available, the same parts every other OEM buys. On top of that, every processor in a given generation (e.g., Kaby Lake) uses exactly the same core (they only design one), so technically it's the exact same processor. Models just get different voltages, clocks, and cache amounts depending on configuration, but the cores themselves are always the same, whether there are 2, 4, or 6 of them. Kaby Lake and Coffee Lake also use exactly the same core as Skylake, just manufactured on a refined process that allowed higher clocks, plus minor tweaks that still don't change the way the CPU processes tasks. They all work the same way, are equally vulnerable to any potential issues, and get those issues fixed in the same way.

I see. What I was trying to get at was: if I had a 7700 in my MBP and a Dell had a 7700 in theirs, would our Geekbench scores be the same? And if not, would the delta between pre- and post-patch be the same on the two machines?

So while I get that the Intel processors Apple uses in a given generation are nothing special, just labeled differently and with minor changes, I wanted to see whether the performance drop differed between OSes, keeping everything else the same. But I see you answered that.

I just want to see those benchmarks for my machine, or really just any new Mac that has had the patch applied. Yeah, I don't do any intensive tasks outside of gaming, but even then, gaming should have taken a performance hit, and I feel like I may be getting about 10 fewer FPS in a game since earlier this year. Depends on when that patch was pushed out.

The things that suffered the most are OS-independent, so apart from minor differences, both Windows and Mac will see a fairly similar performance hit. For instance, regardless of the OS, the processor gets instructed to fetch data from the hard drive/SSD. The processor does its work before the OS gets the data back, and the work the CPU does between receiving the request and handing the data back to the OS was one of the things that took a major hit. That's how the CPU handles its tasks, and it happens completely outside the OS's jurisdiction: the OS isn't aware of what the CPU is doing internally and can do nothing to help or interfere, and that's the layer slowed down by the patches (microcode, which was applied to the processor, not the OS). The OS only knows that the processor took longer to return the requested data.

Furthermore, processors have something called "speculative execution", which tries to guess what they will be asked to do next based on what they've just done, and prepares some extra work beforehand to gain a performance advantage. Intel's implementation was very aggressive and could be fooled (an attacker could feed the processor a crafted pattern to make it guess in a specific, malicious way). Since the "guessing mechanism" is embedded in the hardware, Intel's only "fix" was to rein it in, effectively switching parts of it off and making the processor do more of the grunt work in real time instead. Fixing it for real requires a hardware redesign: a future-generation chip could have a more secure speculative execution engine, like AMD's (which was never vulnerable to Meltdown), that wouldn't have to be restricted because it couldn't be fooled so easily, bringing back the performance advantage of speculative execution. You can't get that performance back through software, let alone through the OS (which can only help by sending fewer requests to the CPU, but has little control over how the CPU performs its tasks).
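The "guessing mechanism" can be pictured with the textbook 2-bit saturating-counter branch predictor. This is a toy model chosen for illustration, not Intel's actual design, which is far more elaborate and undocumented; `two_bit_prediction_rate` is a hypothetical helper. It shows both halves of the point above: a regular pattern is guessed right most of the time, and because the guess depends only on recent history, whoever controls that history can steer the next guess. After a long run of "taken" outcomes the counter predicts "taken" again even on the one occasion that matters, which is roughly how Spectre variant 1 gets a bounds check speculatively bypassed.

```python
import random


def two_bit_prediction_rate(outcomes):
    """Replay a branch history through a single 2-bit saturating counter.

    Counter states 0-1 predict "not taken", 2-3 predict "taken".
    Returns the fraction of branches that were predicted correctly.
    """
    if not outcomes:
        return 0.0
    counter = 2  # start in the "weakly taken" state
    correct = 0
    for taken in outcomes:
        predicted_taken = counter >= 2
        if predicted_taken == taken:
            correct += 1
        # Nudge the counter toward what actually happened, saturating at 0 and 3.
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return correct / len(outcomes)


# A loop branch: taken 9 times, then falls through once, repeated.
loop_branch = ([True] * 9 + [False]) * 100
# A branch with no pattern at all.
rng = random.Random(42)
coin_flips = [rng.random() < 0.5 for _ in range(10_000)]

print(f"loop branch:   {two_bit_prediction_rate(loop_branch):.0%}")  # 90%
print(f"random branch: {two_bit_prediction_rate(coin_flips):.0%}")
```

The random branch lands near 50%, i.e. guessing buys nothing there; the loop branch is only missed on its final iteration.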

In terms of games, they barely took any hit, as the CPU work in games is very simple and mostly limited to feeding frames to the GPU to process. That has almost nothing to do with Meltdown or Spectre. Furthermore, on laptops with low-power GPUs, frame rates in games are limited purely by the GPU, which didn't take any performance hit. Even if the CPU became slower, it could still prepare frames faster than the relatively weak GPU can render them. Say a 7700 is capable of throwing 140 frames per second at a GPU. If a patch slowed the CPU down so it can only pump out 120 frames per second, the GPU still can't render more than 30-40, so you still wouldn't notice any difference. That, plus the fact that games don't do much in terms of speculative execution or heavy real-time data manipulation (so the CPU hit there was tiny), means you likely wouldn't notice any difference, except that loading screens could last longer.
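The bottleneck argument boils down to taking the slower of the two stages. A minimal sketch, assuming CPU and GPU work fully overlap (real frame pacing is messier), with the illustrative numbers from the post rather than measurements; `effective_fps` is a made-up name:

```python
def effective_fps(cpu_fps, gpu_fps):
    """Frames per second actually delivered when CPU and GPU work as a pipeline.

    Each frame must be prepared by the CPU and rendered by the GPU; with the
    two overlapping, throughput is capped by whichever stage is slower.
    """
    return min(cpu_fps, gpu_fps)


# Illustrative numbers from the post, not benchmarks.
gpu_limit = 35  # a low-power laptop GPU managing 30-40 fps
before_patch = effective_fps(cpu_fps=140, gpu_fps=gpu_limit)
after_patch = effective_fps(cpu_fps=120, gpu_fps=gpu_limit)
print(before_patch, after_patch)  # 35 35
```

The CPU slowdown would only show up in the frame counter on a machine whose GPU could outrun the CPU, e.g. `effective_fps(140, 200)` versus `effective_fps(120, 200)`.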

The Geekbench score doesn't show much regression regardless of the OS, because most areas hit by the patches aren't tested by Geekbench, and where some are, the penalties don't affect the total score in a meaningful way. For instance, if you run 200 different performance benchmarks and one of them reports a 99% drop, the total score (assuming it's a simple average) will still be within 1% of its prior value. However, the part of the processor that now does so poorly is the bottleneck for many system activities. The remaining parts of the processor aren't specialized enough to cover for it efficiently, and can do it at, let's say, 30% of the efficiency. Because they still perform as well as they did, the score didn't change, but in many real-life scenarios they need to spend roughly three times as long covering for the part that took the hit (as also measured by other, more specialized benchmarks, or even general system tests).
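The arithmetic behind the "200 benchmarks" example is easy to check. How Geekbench actually combines its sub-scores isn't covered in the thread, so this sketch compares two assumptions: a plain arithmetic mean, for which the "within 1%" figure holds, and a geometric mean (the approach SPEC CPU uses for its suites), which punishes a single collapsed result a bit more. It also checks the "30% efficiency means about three times as long" step.

```python
import math


def arithmetic_mean(scores):
    return sum(scores) / len(scores)


def geometric_mean(scores):
    # Average in log space, a common way to combine benchmark ratios.
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# 200 sub-benchmarks normalized to 1.0 before patching; one drops by 99%.
after = [1.0] * 199 + [0.01]

print(f"arithmetic mean: {arithmetic_mean(after):.3f}")  # 0.995
print(f"geometric mean:  {geometric_mean(after):.4f}")  # 0.9772

# Covering for a part at 30% efficiency takes 1 / 0.3 times as long.
print(f"time multiplier: {1 / 0.3:.2f}x")  # 3.33x
```

Either way the headline number moves by a couple of percent at most (about 0.5% or 2.3%), even though one sub-test lost 99%.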

I gotcha. I'm thinking maybe my drop in frames might be due to using an external monitor, albeit at the same resolution and scaling as before. That would make sense too if the GPU was a tad bit more stressed than usual by being connected to a monitor vs using my MBP's screen.

And there's no way to undo the patch on the user's end, right? Say someone wants to risk it all to gain the performance back? I wouldn't be against it. I still have the December 2016 security patch on my S7 because Sprint and/or Samsung are incompetent tits and can't recognize that my phone is stuck on a beta. Their beta.

I'm not sure about Mac, but I assume the update flashed the firmware (BIOS) together with the microcode, so that can't be undone.

On Windows, you can disable some of the protections with https://www.grc.com/inspectre.htm, for instance. I think the security benefits are needed, though. Now that Meltdown and Spectre are public, they might be exploited more frequently.
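InSpectre is Windows-only. For comparison, recent Linux kernels report the same kind of status as one text file per vulnerability under `/sys/devices/system/cpu/vulnerabilities/`. This minimal sketch only reads those files and changes nothing; `mitigation_status` is a hypothetical helper, and on macOS, Windows, or older kernels without that directory it simply returns an empty result.

```python
from pathlib import Path

VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def mitigation_status(vuln_dir=VULN_DIR):
    """Map each CPU vulnerability the Linux kernel reports to its status line.

    Returns an empty dict when the sysfs directory doesn't exist
    (non-Linux systems or kernels that predate this interface).
    """
    if not vuln_dir.is_dir():
        return {}
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(vuln_dir.iterdir())
        if entry.is_file()
    }


if __name__ == "__main__":
    status = mitigation_status()
    if not status:
        print("No vulnerability report available on this system.")
    for name, state in status.items():
        print(f"{name:20} {state}")
```

Each line reads something like "Not affected", "Vulnerable", or "Mitigation: ..." followed by the technique the kernel is using.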

If I'm using my monitor, I have the laptop closed 99% of the time. I don't use it as a second display very often and definitely not while gaming.

The game doesn't look all that different, but I do have a tiny counter in the top corner that shows ping and FPS, and it's in the low 40s on fully maxed-out settings, whereas before it was 50-53 fps. The latter was on my MBP screen; the low 40s are on the monitor.

It's not a big deal but if you're someone that is easily alarmed by numbers, regardless of what they mean, it is a bit puzzling why it would drop so much. Maybe I did inadvertently change some settings, or they were reset in the many weekly updates DOTA has.

I might just run it at all-low settings for the hell of it. I think I get like 130+ fps but the details are obviously Windows 95-esque.

The Sprint variant S7 got Oreo two days ago. My mom's phone got it and it was downloaded automatically but I don't think she accepted to install it yet. Still nothing on mine because Samsung or Sprint is too dumb to put older versions of Android on some sort of checklist on their network to get the prompt to update. Still on that Nougat beta, not even the final release lol

I contacted Samsung and they told me to use Smart Switch, but it doesn't work well on macOS, at least not on the two Macs I've tried it on in the past three years. It recognizes my device but gives an error and force closes. Then the rep said to take it to a Best Buy and look for a Samsung Experience counter, where someone would be able to flash it. So I have to sit in the store like an asshole for 90 minutes to get it flashed?

I'm thinking about booting up our old Windows machine from 2005 and using ODIN on that, but I've also forgotten how to use it. And I'd have to find the factory image for Nougat on Sprint. So much work.

That's why I stopped using betas. I didn't do the iOS 12 beta (I did on my Air, but not my Pro) and didn't do the Mojave beta on my MBP. Fuck that noise.

And one hour after my last post, I went ahead and used my dad's XPS 15 to get ODIN and flash the stock Nougat from Sprint, and just upgraded from there until I got to Oreo.

As expected, it changed fuck all but it did allow some apps of mine to update. There are plenty of Google apps that had a minimum requirement of 8.0 or higher and they all just got updated in a batch.

Of course it factory reset my phone, and Smart Switch, which I also used on the XPS to make a backup, seemed to restore maybe 40 of my 100+ apps.

Ah well, Sprint recognizes my phone as legit now, though, so I'm happy. Shouldn't be missing any more updates like I did for the past 18 months.

So, for many server and database purposes, Intel's chips will now have half the threads: low-power laptop i7s are relegated to mere dual-cores, and a desktop i7 becomes a $100-cheaper i5:

https://www.tomshardware.com/news/openbsd-disables-intel-hyper-threading-spectre,37332.html

In the server market, this will be a tremendous hit to Intel. The new AMD Threadripper is coming with 64 threads vs. Intel's best $10,000 chip being relegated to 28.
